Yong-Hyun Park

1st-year Ph.D. student @ UPenn CIS. park19@seas.upenn.edu

I’m a researcher who learns by understanding mathematically, mechanistically, from first principles.

Before my PhD, all my work was about understanding: how diffusion models work geometrically and how concepts emerge in generative models. Now I want to move from understanding to building. In the near term, I’m working on improving how we use generative models, making them more capable, controllable, and efficient.

In the long run, and more ambitiously, I’m exploring a fundamental shift in how these models learn. Current generative models rely on imitation (behavioral cloning), like memorizing chess games without understanding what makes a move good. But I believe goal-conditioned behavior is what makes human intelligence powerful: the ability to reason about objectives and plan toward them over long horizons. Today’s agent systems, such as LLMs and VLAs, lack this. They excel at local predictions but struggle with sustained, goal-directed reasoning. I’m exploring how to shift toward learning what constitutes progress: moving from imitating next steps to understanding how actions lead to objectives.

These are big questions that can’t be solved alone, and I’m excited to explore them alongside other researchers—the conversations and collaborations are what make this work meaningful.

news

| Feb 06, 2025 | Our paper “Concept-TRAK: Understanding how diffusion models learn concepts through concept attribution” has been accepted at ICLR 2026! (Mentor: Chieh-Hsin (Jesse) Lai) |

|---|---|

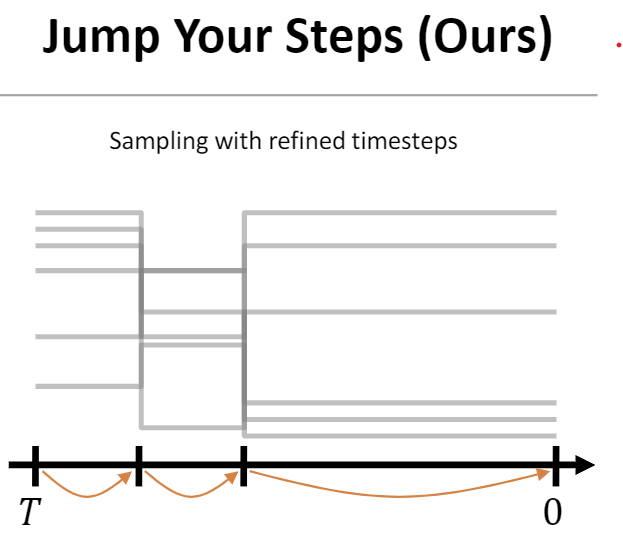

| Mar 11, 2024 | Our paper “Jump Your Steps: Optimizing Sampling Schedule of Discrete Diffusion Models” has been accepted at ICLR 2025! (Mentor: Chieh-Hsin (Jesse) Lai) |

| Jan 22, 2024 | I will be joining UPenn CIS as a Ph.D. student starting Fall 2025, advised by Prof. Jiatao Gu. |

selected publications

- Concept-TRAK: Understanding how diffusion models learn concepts through concept attribution. In The Fourteenth International Conference on Learning Representations, 2026

- Jump Your Steps: Optimizing Sampling Schedule of Discrete Diffusion Models. In The Thirteenth International Conference on Learning Representations, 2025

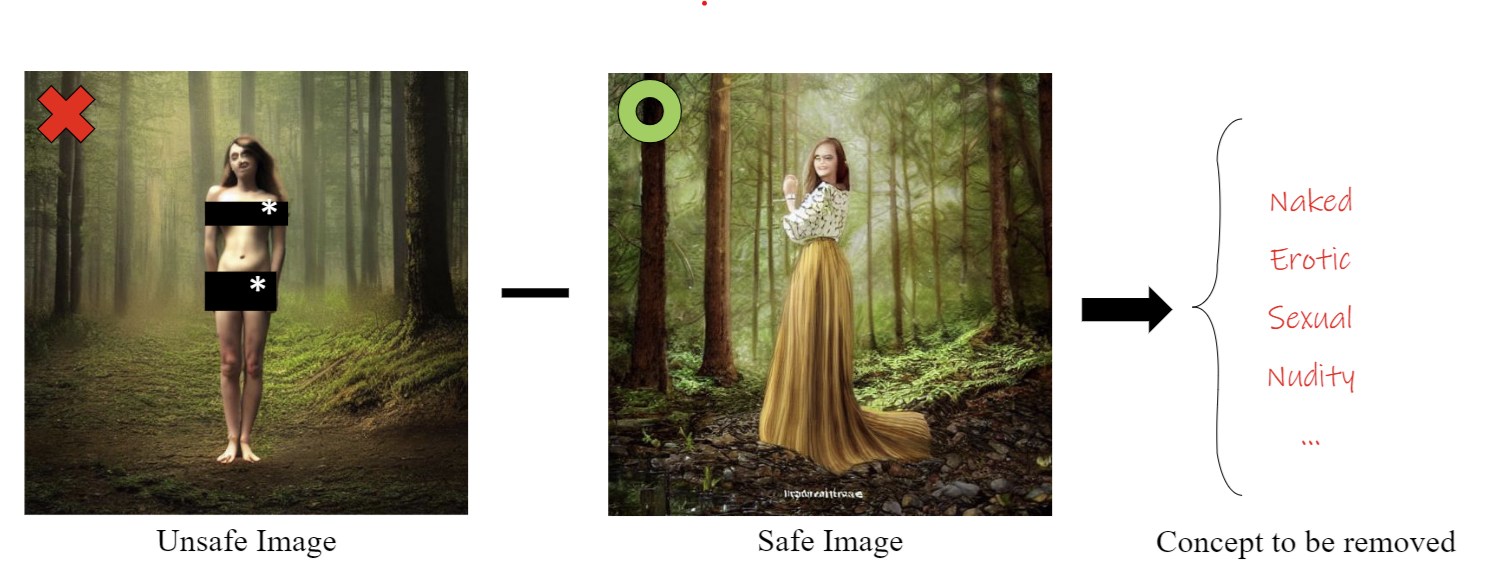

- Direct Unlearning Optimization for Robust and Safe Text-to-Image Models. In The Thirty-eighth Annual Conference on Neural Information Processing Systems, 2024

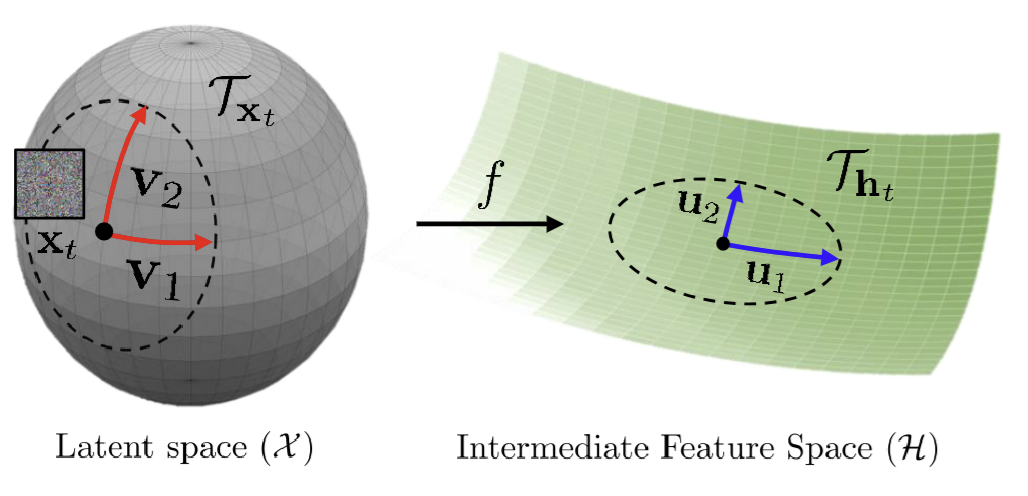

- Understanding the Latent Space of Diffusion Models through the Lens of Riemannian Geometry. In Advances in Neural Information Processing Systems, 2023